When you buy through links on our articles, Future and its syndication partners may earn a commission.

Credit: ServeTheHome

-

HBM is fundamental to the AI revolution as it allows ultra fast data transfer close to the GPU

-

Scaling HBM performance is difficult if it sticks to JEDEC protocols

-

Marvell and others wants to develop a custom HBM architecture to accelerate its development

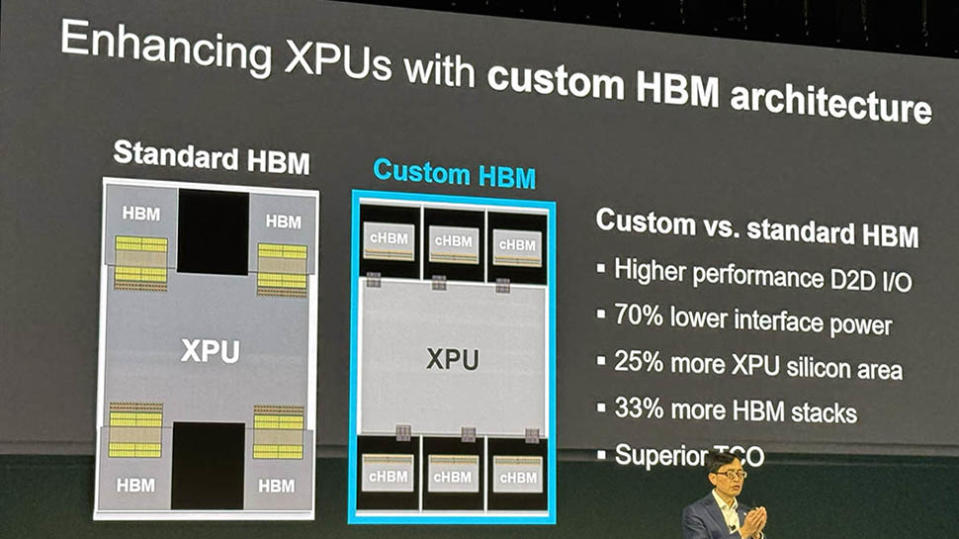

Marvell Technology has unveiled a custom HBM compute architecture designed to increase the efficiency and performance of XPUs, a key component in the rapidly evolving cloud infrastructure landscape.

The new architecture, developed in collaboration with memory giants Micron, Samsung, and SK Hynix, aims to address limitations in traditional memory integration by offering tailored solutions for next-generation data center needs.

Advertisement

Advertisement

The architecture focuses on improving how XPUs – used in advanced AI and cloud computing systems – handle memory. By optimizing the interfaces between AI compute silicon dies and High Bandwidth Memory stacks, Marvell claims the technology reduces power consumption by up to 70% compared to standard HBM implementations.

Moving away from JEDEC

Additionally, its redesign reportedly decreases silicon real estate requirements by as much as 25%, allowing cloud operators to expand compute capacity or include more memory. This could potentially allow XPUs to support up to 33% more HBM stacks, massively boosting memory density.

“The leading cloud data center operators have scaled with custom infrastructure. Enhancing XPUs by tailoring HBM for specific performance, power, and total cost of ownership is the latest step in a new paradigm in the way AI accelerators are designed and delivered,” Will Chu, Senior Vice President and General Manager of the Custom, Compute and Storage Group at Marvell said.

“We’re very grateful to work with leading memory designers to accelerate this revolution and, help cloud data center operators continue to scale their XPUs and infrastructure for the AI era.”

Advertisement

Advertisement

HBM plays a central role in XPUs, which use advanced packaging technology to integrate memory and processing power. Traditional architectures, however, limit scalability and energy efficiency.

Marvell’s new approach modifies the HBM stack itself and its integration, aiming to deliver better performance for less power and lower costs – key considerations for hyperscalers who are continually seeking to manage rising energy demands in data centers.

ServeTheHome’s Patrick Kennedy, who reported the news live from Marvell Analyst Day 2024, noted the cHBM (custom HBM) is not a JEDEC solution and so will not be standard off the shelf HBM.

“Moving memory away from JEDEC standards and into customization for hyperscalers is a monumental move in the industry,” he writes. “This shows Marvell has some big hyperscale XPU wins since this type of customization in the memory space does not happen for small orders.”

Advertisement

Advertisement

More in Business

The collaboration with leading memory makers reflects a broader trend in the industry toward highly customized hardware.

“Increased memory capacity and bandwidth will help cloud operators efficiently scale their infrastructure for the AI era,” said Raj Narasimhan, senior vice president and general manager of Micron’s Compute and Networking Business Unit.

“Strategic collaborations focused on power efficiency, such as the one we have with Marvell, will build on Micron’s industry-leading HBM power specs, and provide hyperscalers with a robust platform to deliver the capabilities and optimal performance required to scale AI.”

More from TechRadar Pro

EMEA Tribune is not involved in this news article, it is taken from our partners and or from the News Agencies. Copyright and Credit go to the News Agencies, email news@emeatribune.com Follow our WhatsApp verified Channel